Generative AI

In humanoid robotics, generative AI is becoming the link between perception and action. These models do not just label data; they produce it: trajectories, grasp plans, motion sequences, and even control policies, generated from images, language, or sensor input.

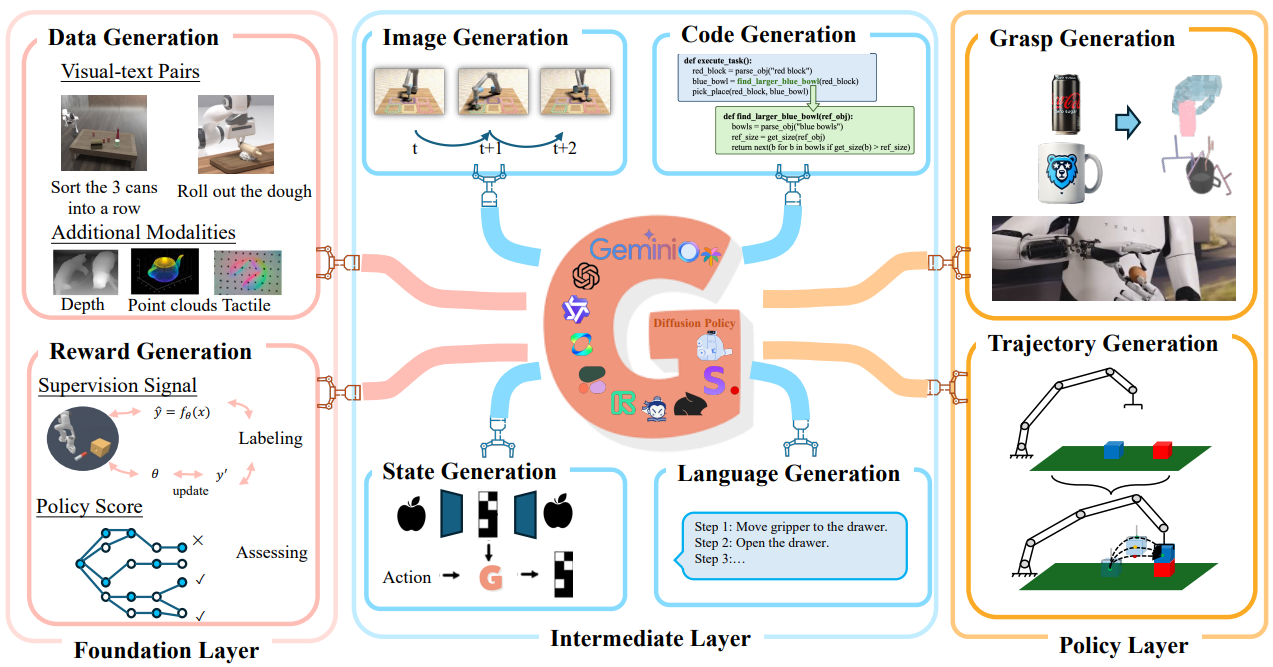

A 2024 survey on robotic manipulation outlined a three-layer framework: foundation models provide broad pretraining; intermediate models translate across modalities (like vision to code); and policy generators output actionable control sequences.
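To make the three layers concrete, here is a minimal Python sketch of how they might hand data to one another. It is an illustration under stated assumptions, not the survey's implementation: every name in it (`foundation_layer`, `intermediate_layer`, `policy_layer`, `Subgoal`, `ControlCommand`) is hypothetical, and each layer is a toy stand-in for what would be a learned model in a real system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SceneEmbedding:
    """Hypothetical stand-in for features from a pretrained foundation model."""
    object_names: List[str]


@dataclass
class Subgoal:
    """Hypothetical intermediate representation bridging modalities."""
    verb: str
    target: str


@dataclass
class ControlCommand:
    """Hypothetical low-level command: joint targets held for a duration."""
    joint_targets: List[float]
    duration_s: float


def foundation_layer(detected_objects: List[str]) -> SceneEmbedding:
    # Assumption: a real system would run a pretrained encoder on camera
    # images; here the "embedding" is just a sorted list of object names.
    return SceneEmbedding(object_names=sorted(detected_objects))


def intermediate_layer(scene: SceneEmbedding, instruction: str) -> List[Subgoal]:
    # Toy cross-modal translation: any scene object mentioned in the
    # instruction becomes a reach-then-grasp pair of symbolic subgoals.
    words = instruction.lower().split()
    subgoals: List[Subgoal] = []
    for name in scene.object_names:
        if name in words:
            subgoals.append(Subgoal(verb="reach", target=name))
            subgoals.append(Subgoal(verb="grasp", target=name))
    return subgoals


def policy_layer(subgoals: List[Subgoal], num_joints: int = 7) -> List[ControlCommand]:
    # Toy policy generator: emits placeholder (all-zero) joint targets.
    # A learned policy would output real trajectories instead.
    commands: List[ControlCommand] = []
    for subgoal in subgoals:
        duration = 1.0 if subgoal.verb == "reach" else 0.5
        commands.append(ControlCommand([0.0] * num_joints, duration))
    return commands


def run_pipeline(detected_objects: List[str], instruction: str) -> List[ControlCommand]:
    scene = foundation_layer(detected_objects)
    subgoals = intermediate_layer(scene, instruction)
    return policy_layer(subgoals)


if __name__ == "__main__":
    for command in run_pipeline(["cup", "plate"], "pick up the cup"):
        print(command)
```

The point of the sketch is the interface between layers rather than their contents: each stage consumes the previous stage's output type, so any one layer could in principle be swapped for a stronger model without changing the others.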

Generative models now power every stage of robot learning: producing synthetic training data, generating perception-to-action mappings, and creating control policies that can be executed on hardware. Source: Generative Artificial Intelligence in Robotic Manipulation: A Survey

Recent systems use vision-language models to produce zero-shot behaviors: placing unseen objects, adapting to novel instructions, and writing control logic on the fly. The frontier is embodied improvisation: agents that do not just execute plans but generate them in real time.