Manipulation

Manipulation refers to a robot’s ability to influence the world through intentional physical interaction. In humanoid robotics, this includes object handling (grasping, lifting, pushing, and placing) as well as higher-order actions such as stacking, tool use, and multi-step task sequencing. It requires tight integration of vision, control, and contact dynamics and, increasingly, language.

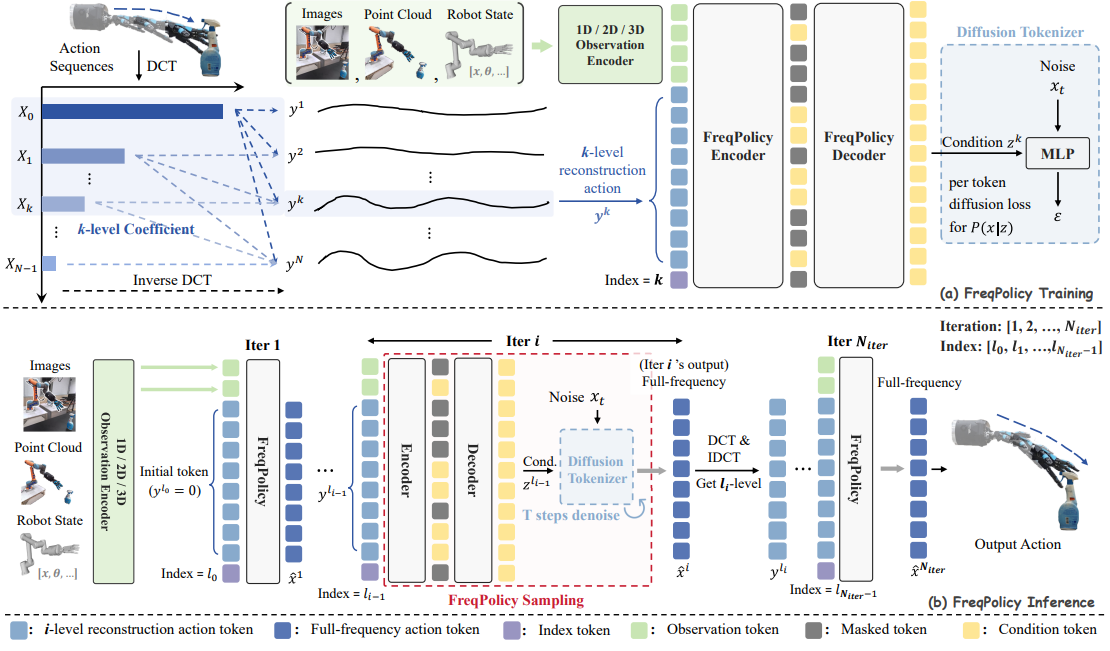

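Many modern systems use end-to-end visuomotor policies that map camera observations (often paired with a language instruction) directly to robot actions, with the goal of generalizing across tasks. Transformer-based models such as RT-X are trained on pooled data from many robot embodiments, so a single policy can be shared across robot types and scenarios. RT-Trajectory conditions the policy on coarse trajectory sketches, which adds explicit spatial and temporal structure to the task specification. Recent research also explores new action representations: FreqPolicy, for instance, models motion in the frequency domain and generates actions autoregressively with continuous tokens, enabling high-fidelity control from a compact representation.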

Structure in motion: FreqPolicy uses frequency-based trajectory modeling to synthesize smooth, task-aware manipulation from learned codes. Source: FreqPolicy: Frequency Autoregressive Visuomotor Policy with Continuous Tokens
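To make "modeling motion in the frequency domain" more concrete, the sketch below uses a generic discrete cosine transform (DCT) to represent a 1-D joint trajectory. The low-frequency coefficients capture the overall shape of the motion, and the high-frequency ones capture fine detail and jitter. Keeping only the first few coefficients therefore yields a compact, smooth code. This is a minimal illustration under stated assumptions, not FreqPolicy's architecture: the paper's tokenization and autoregressive model are not reproduced here. The helpers `dct`, `idct`, `encode`, and `decode` are hypothetical names, and the "jitter" is placed on a single high-frequency basis function so that the effect of truncation is exact and easy to see.

```python
import math


def dct(x):
    """Orthonormal DCT-II: time-domain samples -> frequency coefficients."""
    n = len(x)
    coeffs = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        s = sum(x[t] * math.cos(math.pi * (t + 0.5) * k / n) for t in range(n))
        coeffs.append(scale * s)
    return coeffs


def idct(c):
    """Inverse of dct() (orthonormal DCT-III): coefficients -> samples."""
    n = len(c)
    samples = []
    for t in range(n):
        s = 0.0
        for k in range(n):
            scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
            s += scale * c[k] * math.cos(math.pi * (t + 0.5) * k / n)
        samples.append(s)
    return samples


def encode(trajectory, keep):
    """Hypothetical helper: keep only the `keep` lowest-frequency coefficients."""
    return dct(trajectory)[:keep]


def decode(codes, length):
    """Hypothetical helper: zero-fill dropped high frequencies, return samples."""
    padded = list(codes) + [0.0] * (length - len(codes))
    return idct(padded)


if __name__ == "__main__":
    n = 32
    # Assumed smooth joint-angle profile built from three low-frequency components.
    smooth = idct([1.0, 0.5, -0.25] + [0.0] * (n - 3))
    # Assumed high-frequency jitter placed on the highest DCT basis function.
    jitter = idct([0.0] * (n - 1) + [0.1])
    noisy = [a + b for a, b in zip(smooth, jitter)]

    codes = encode(noisy, keep=3)  # 32 samples compressed to 3 numbers
    recovered = decode(codes, length=n)
    max_err = max(abs(a - b) for a, b in zip(recovered, smooth))
    print(f"kept {len(codes)} of {n} coefficients, max error {max_err:.2e}")
```

Because the transform is orthonormal, truncation discards exactly the energy in the dropped coefficients. In this toy case that means the jitter is removed and the smooth profile is recovered from three numbers. Real trajectories spread energy across more coefficients, so how many to keep is a trade-off between compactness and fidelity.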

The frontier of manipulation is predictive, multitask, and context-aware: robots that don’t just move things, but anticipate, adapt, and execute with purpose.