Natural Language Processing

NLP allows humanoid robots to understand and respond to spoken or written instructions. Early systems relied on keyword spotting or intent matching. Today’s models, often fine-tuned transformer language models, can interpret high-level prompts like “clean up the mess” or “find the red cup” and translate them into executable actions.
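To make the contrast concrete, here is a minimal sketch of the keyword-spotting style of intent matching. The intent names and keyword sets are hypothetical, chosen only for illustration; they are not taken from any particular robot stack.

```python
import re
from typing import Optional

# Hypothetical intent table: each intent lists the keywords that trigger it.
INTENT_KEYWORDS = {
    "clean": {"clean", "tidy", "wipe"},
    "fetch": {"find", "bring", "fetch", "get"},
}


def match_intent(utterance: str) -> Optional[str]:
    """Return the first intent whose keyword set overlaps the utterance."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return None  # no keyword matched


print(match_intent("Find the red cup"))
print(match_intent("The floor is sticky"))
```

The second call finds no intent even though a person would read it as a request to clean. Paraphrases and implied requests like this are where keyword tables break down and learned language models help.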

The frontier is semantic grounding. Robots now combine language models with vision and spatial memory to connect words with physical objects, places, and goals. This enables zero-shot reasoning, where new commands are understood without task-specific training.
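As a rough illustration of grounding, the sketch below resolves a phrase such as "the red cup" against a small spatial memory of detected objects. The `SceneObject` type, the scoring weights, and the exact string matching are all assumptions made for this example. A real system would more likely compare learned language and vision embeddings than compare strings.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class SceneObject:
    """One entry in a hypothetical spatial memory filled by a vision system."""
    label: str
    color: str
    position: Tuple[float, float, float]  # metres, in an assumed map frame


def ground(command: str, memory: List[SceneObject]) -> Optional[SceneObject]:
    """Pick the remembered object that best matches the words in the command."""
    words = set(re.findall(r"[a-z]+", command.lower()))
    best, best_score = None, 0
    for obj in memory:
        # A label match counts more than a colour match (assumed weighting).
        score = 2 * (obj.label in words) + (obj.color in words)
        if score > best_score:
            best, best_score = obj, score
    return best  # None when nothing in memory matches the command


memory = [
    SceneObject("cup", "blue", (1.0, 0.0, 0.8)),
    SceneObject("cup", "red", (2.0, 1.0, 0.8)),
    SceneObject("ball", "red", (0.0, 0.0, 0.0)),
]
target = ground("find the red cup", memory)
print(target.position if target else "no match")
```

The returned object carries a position, which is what turns a phrase into something a motion planner can act on.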

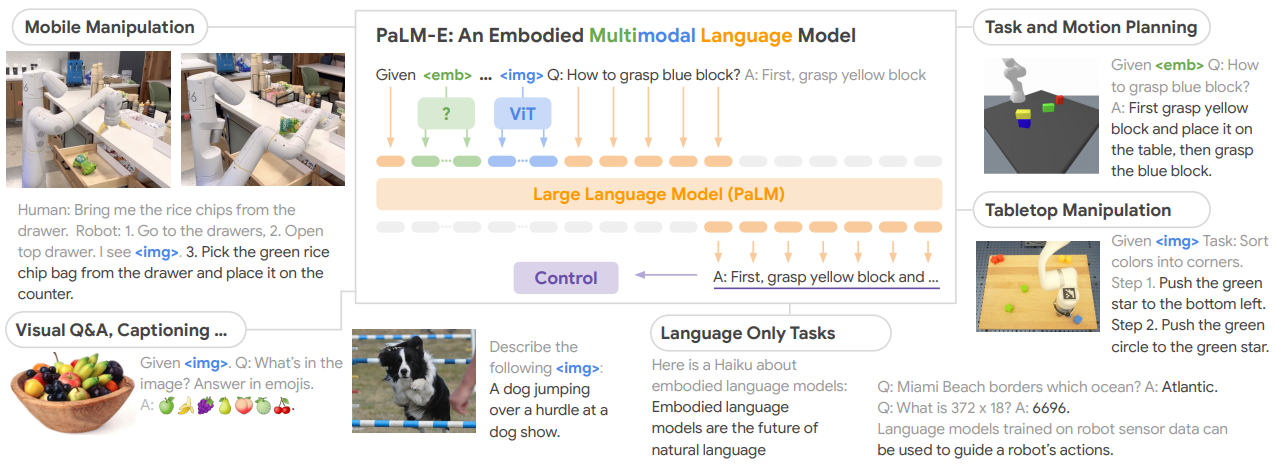

One approach fuses language, visual input, and robot state into a unified model, allowing robots to interpret complex instructions and execute multi-step tasks with context awareness.

Multimodal models combine language, visual data, and proprioception to plan and execute grounded actions. Source: PaLM-E: An Embodied Multimodal Language Model
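The fusion of language, visual input, and robot state described above can be sketched as building one input sequence for a single model. The toy code below is loosely inspired by the PaLM-E idea of encoding continuous observations into the same embedding space as text tokens. The dimensions, weight matrices, and function names are hypothetical, and the plain linear projection stands in for whatever learned encoders a real system would use.

```python
from typing import List, Sequence

Vector = List[float]


def project(features: Sequence[float], weights: Sequence[Sequence[float]]) -> Vector:
    """Linear projection: one output value per weight row."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def build_sequence(
    text_embeddings: List[Vector],
    image_features: Sequence[float],
    state_features: Sequence[float],
    w_image: Sequence[Sequence[float]],
    w_state: Sequence[Sequence[float]],
) -> List[Vector]:
    """Map image and robot-state features into the text embedding space
    and place them in front of the text tokens as extra 'tokens'."""
    image_token = project(image_features, w_image)
    state_token = project(state_features, w_state)
    return [image_token, state_token, *text_embeddings]


# Toy example with a 4-dimensional embedding space (assumed size).
text = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]   # two text tokens
w_image = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]]  # 3 features -> 4 dims
w_state = [[1, 0], [0, 1], [1, 1], [0, 0]]              # 2 features -> 4 dims
sequence = build_sequence(text, [1, 2, 3], [4, 5], w_image, w_state)
print(len(sequence), [len(token) for token in sequence])
```

Because every element of the sequence has the same width, a single transformer can attend across words, pixels, and joint readings without separate per-modality pipelines.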

The next challenge is making NLP fast, safe, and robust enough for dynamic physical environments.