Voice Interaction

Voice interfaces turn spoken language into robotic behavior. For humanoids, this enables hands-free control, natural delegation, and access for non-technical users.

Current pipelines typically pair automatic speech recognition (ASR), which transcribes the user's speech, with a large language model (LLM) that interprets open-ended instructions and translates them into grounded, executable robot actions.

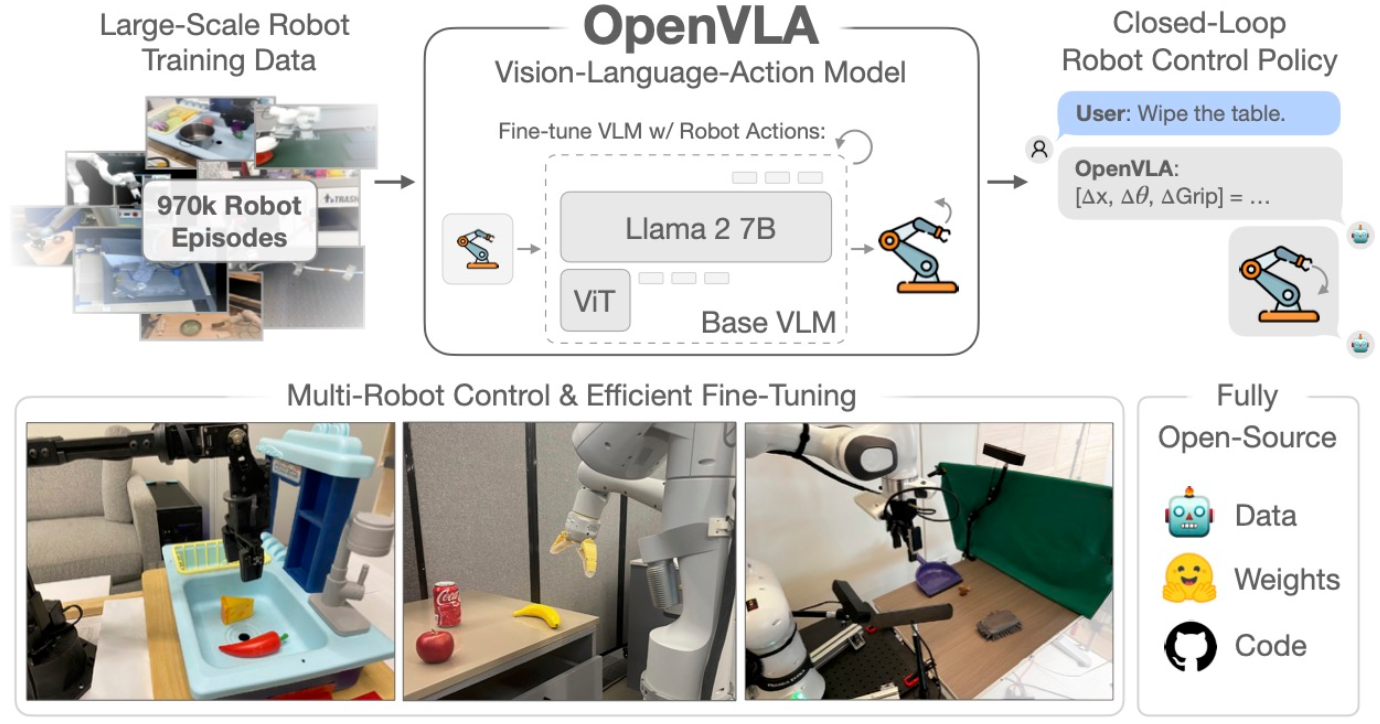

OpenVLA is one example of this approach: it turns natural-language instructions, including transcribed voice commands, into real-world robot behavior by integrating perception, intent parsing, and task planning. Source: OpenVLA: An Open-Source Vision-Language-Action Model
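The ASR-plus-language-model pipeline described above can be sketched as three swappable stages: transcription, intent parsing, and validation against the robot's skill set. This is a minimal illustration under stated assumptions, not OpenVLA's interface. `transcribe` and `parse_intent` are hypothetical stand-ins (the "audio" is plain UTF-8 text and the "language model" is a keyword matcher), and `ALLOWED_SKILLS` is an invented skill list.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical skill set; a real system would query its controller for this.
ALLOWED_SKILLS = {"pick", "place", "go_to"}


@dataclass
class Action:
    skill: str   # robot skill to invoke
    target: str  # object or location the skill acts on


def transcribe(audio: bytes) -> str:
    """Stand-in for an ASR model: here the 'audio' is already UTF-8 text."""
    return audio.decode("utf-8").strip().lower()


def parse_intent(text: str) -> Optional[Action]:
    """Stand-in for an LLM call that maps an utterance to a structured action.

    A real pipeline might prompt a language model to emit JSON; this keyword
    matcher (hypothetical) only illustrates the same input/output contract.
    """
    phrases = {"pick up": "pick", "grab": "pick", "put down": "place", "go to": "go_to"}
    for phrase, skill in phrases.items():
        if text.startswith(phrase + " "):
            target = text[len(phrase):].strip()
            target = target.removeprefix("the ").strip()  # drop a leading article
            return Action(skill, target) if target else None
    return None


def voice_to_action(
    audio: bytes,
    asr: Callable[[bytes], str] = transcribe,
    parser: Callable[[str], Optional[Action]] = parse_intent,
) -> Optional[Action]:
    """ASR -> intent parsing -> validation; None means 'ask the user to rephrase'."""
    action = parser(asr(audio))
    # A real language model can propose skills the robot lacks, so check here.
    if action is None or action.skill not in ALLOWED_SKILLS:
        return None
    return action


print(voice_to_action(b"Pick up the red cup"))
# → Action(skill='pick', target='red cup')
```

Keeping the skill check outside the parser is deliberate: because `asr` and `parser` are injectable, a real model can be dropped in, and anything it proposes that the robot cannot execute is rejected before it reaches the controller.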

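The open challenges are grounding language in physical space (linking a phrase such as "the red cup" to a specific object the robot perceives), resolving ambiguous instructions, and keeping end-to-end latency low enough for real-time interaction.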
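Grounding and ambiguity resolution can be illustrated with a small sketch that matches a parsed referring expression, such as "the red cup", against the objects a perception system reports. The `Detection` and `Clarify` types, the `ground` function, and the scene contents are all hypothetical; the sketch assumes detections already carry a class label, a color, and a 3D position, and it does not address latency.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple, Union


@dataclass
class Detection:
    label: str                            # object class from a hypothetical detector
    color: str                            # coarse attribute usable for disambiguation
    position: Tuple[float, float, float]  # x, y, z in the robot frame (metres)


@dataclass
class Clarify:
    question: str  # follow-up question to speak back to the user


def ground(label: str, color: Optional[str],
           scene: List[Detection]) -> Union[Detection, Clarify]:
    """Resolve a referring expression such as 'the red cup' to one detection."""
    matches = [d for d in scene
               if d.label == label and (color is None or d.color == color)]
    if len(matches) == 1:
        return matches[0]  # unambiguous: this object is the grounded target
    if not matches:
        described = f"{color} {label}" if color else label
        return Clarify(f"I don't see a {described}.")
    colors = sorted({d.color for d in matches})
    if len(colors) > 1:
        # The remaining candidates differ by color, so ask about color.
        return Clarify(f"Which {label} do you mean: the {' or the '.join(colors)} one?")
    # Candidates are indistinguishable by the attributes available here.
    return Clarify(f"I see {len(matches)} matching {label} objects; "
                   "can you point to the one you mean?")


scene = [
    Detection("cup", "red", (0.4, 0.1, 0.8)),
    Detection("cup", "blue", (0.5, -0.2, 0.8)),
    Detection("bowl", "white", (0.3, 0.0, 0.8)),
]
print(ground("cup", None, scene).question)
# → Which cup do you mean: the blue or the red one?
print(ground("cup", "red", scene).position)
# → (0.4, 0.1, 0.8)
```

Returning a clarification question instead of guessing mirrors how a human assistant would handle "the cup" when two cups are on the table.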