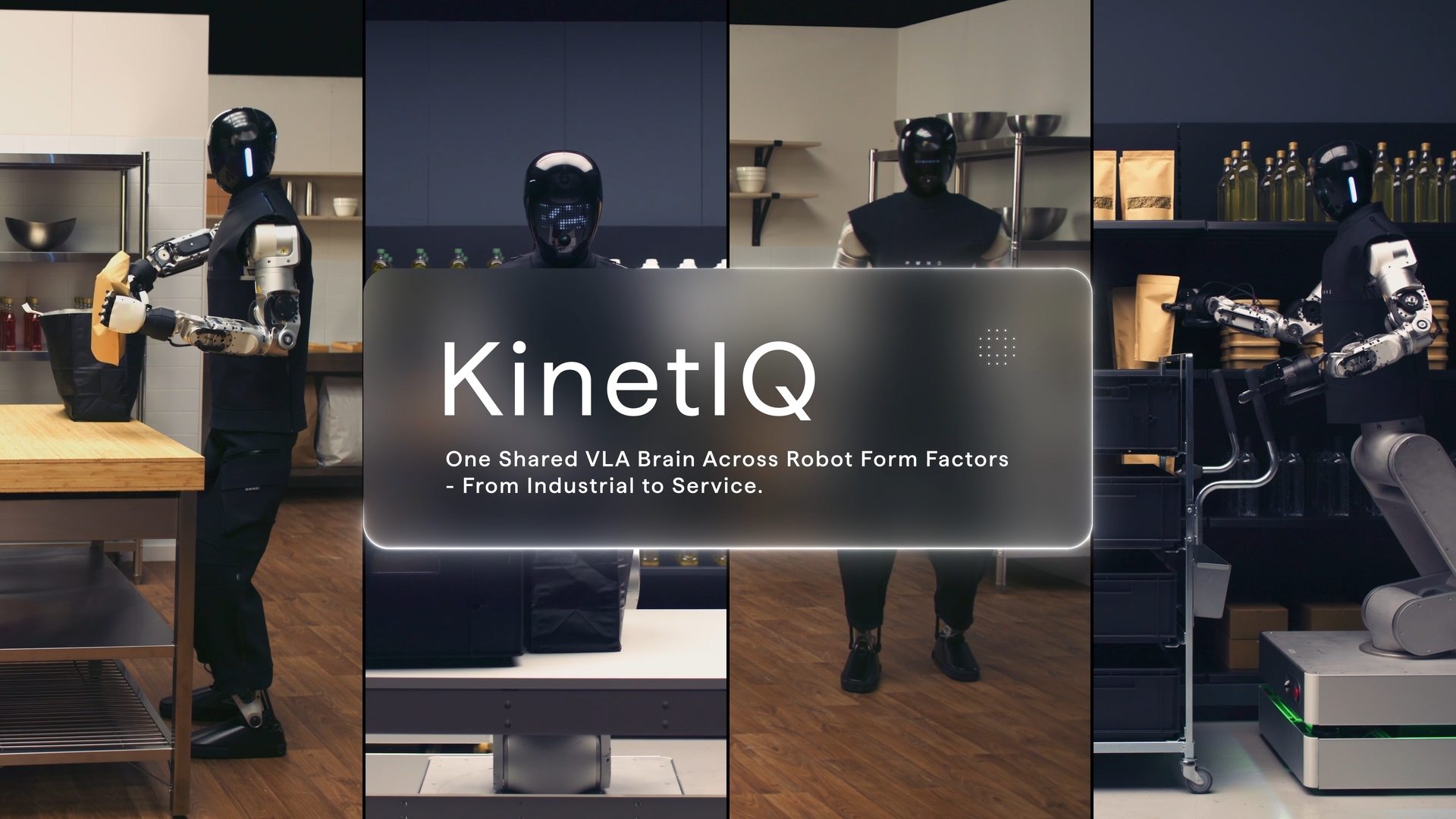

Introducing KinetIQ

Today we’re introducing KinetIQ, Humanoid’s own AI framework for end-to-end orchestration of humanoid robot fleets across industrial, service, and home applications. A single system controls robots with different embodiments and coordinates interactions between them.

The architecture is cross-timescale: four layers operate simultaneously, from fleet-level goal assignment to millisecond-level joint control. Each layer treats the layer below as a set of tools, orchestrating them via prompting and tool use to achieve goals set from above. This agentic pattern, proven in frontier AI systems, allows components to improve independently while the overall system scales naturally to larger fleets and more complex tasks.

Our wheeled-base robots run industrial workflows: back-of-store grocery picking, container handling, and packing across retail, logistics, and manufacturing. The bipedal robot is our R&D platform for service and home, showcasing voice interaction, online ordering, and grocery handling as an intelligent assistant.

Cross-embodiment

A single AI model can control robots with different morphologies and end-effector designs. Data collected on one embodiment helps train and improve performance across the fleet.

Cross-timescale

KinetIQ simultaneously operates across several cognitive layers, each running at its own timescale, both in terms of the decision-making frequency and planning horizon.

System 3 — Humanoid AI Fleet Agent

An agentic AI layer that treats each robot as a tool and, reacting within seconds, dispatches robots to optimize fleet operations.

System 3 integrates with facility management systems across logistics, retail and manufacturing, and is also applicable to service scenarios and smart-home coordination. Our KinetIQ Agentic Fleet Orchestrator ingests task requests, expected outcomes, SOPs, real-time request updates and facility context, and allocates tasks and information across wheeled and bipedal robots, coordinating robot swaps at workstations, to maximize throughput and uptime.

The KinetIQ Fleet Orchestrator directs two-way communication with facility systems to:

- receive new task requests and changes/reassignments,

- track task progress and performance metrics,

- report completion and issues,

- ensure exceptions are handled and resolved in coordination with traditional or agentic facility management systems.
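To make the allocation step concrete, here is a minimal sketch of how a fleet layer might hand task requests to robots. It is an illustration only, not KinetIQ's actual orchestrator: the `Robot` and `TaskRequest` classes, the capability names, and the greedy shortest-queue rule are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Robot:
    # Hypothetical robot record; field names are assumptions for this sketch.
    name: str
    embodiment: str  # e.g. "wheeled" or "bipedal"
    capabilities: set
    queue: list = field(default_factory=list)


@dataclass
class TaskRequest:
    # Hypothetical task request as it might arrive from a facility system.
    task_id: str
    required_capability: str


def allocate(tasks, robots):
    """Greedy allocation: each task goes to the capable robot with the shortest queue.

    Returns (assignments, unassigned), where unassigned lists task ids that
    no robot can serve and that would be reported back as exceptions.
    """
    assignments = {}
    unassigned = []
    for task in tasks:
        capable = [r for r in robots if task.required_capability in r.capabilities]
        if not capable:
            unassigned.append(task.task_id)
            continue
        # min() keeps the first robot on ties, so the result is deterministic.
        chosen = min(capable, key=lambda r: len(r.queue))
        chosen.queue.append(task.task_id)
        assignments[task.task_id] = chosen.name
    return assignments, unassigned


robots = [
    Robot("w1", "wheeled", {"pick", "pack"}),
    Robot("b1", "bipedal", {"pick", "voice"}),
]
tasks = [
    TaskRequest("t1", "pick"),
    TaskRequest("t2", "pick"),
    TaskRequest("t3", "voice"),
    TaskRequest("t4", "weld"),
]
assignments, unassigned = allocate(tasks, robots)
```

In this toy run the two picking tasks are spread across both embodiments, and the task nobody can perform is surfaced for exception handling rather than silently dropped.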

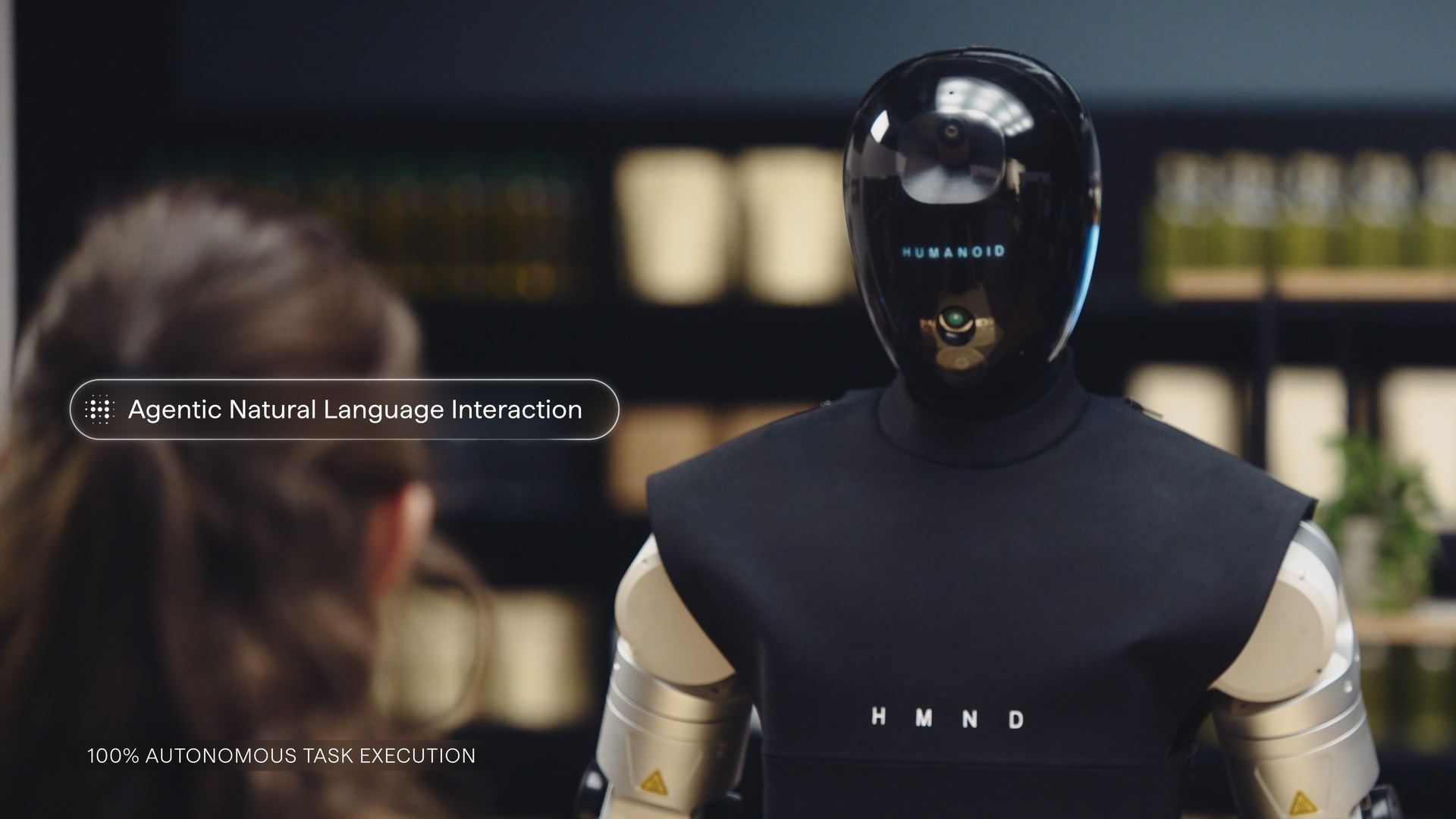

System 2 — Robot-Level Reasoning

A robot-level agentic layer that plans interactions with the environment to achieve goals set by System 3. It operates on timescales from seconds to under a minute.

System 2 uses an omni-modal language model to observe the environment and interpret high-level instructions from System 3. It decomposes goals into sub-tasks by reasoning about the required actions to complete its assignments, as well as the best sequence and approach. Plans are updated dynamically from visual context instead of relying on fixed, pre-programmed sequences, similar to how agentic systems select and sequence tools. These plans can be saved as workflows/SOPs to be executed again in the future and shared across the fleet.

System 2 also monitors execution and evaluates whether the VLA (System 1) is making progress. If the system determines that it’s unable to complete a task, or needs assistance, it requests human support through the fleet layer (System 3). Assistance can be delivered via interventions at System 2 level (through prompting) or at the level of System 1 (through teleoperation or direct joint control), either remotely or on-site.
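The monitor-and-escalate behavior can be sketched as a small loop. This is a simplified illustration under stated assumptions, not the KinetIQ implementation: the `run_plan` function, the `execute` callback standing in for System 1, and the fixed retry budget are all hypothetical.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"


def run_plan(subtasks, execute, max_attempts=2):
    """Run sub-tasks in order and escalate when one keeps failing.

    `execute(subtask)` is a hypothetical blocking call that stands in for
    the low-level executor and returns a Status.
    """
    log = []
    for subtask in subtasks:
        for attempt in range(1, max_attempts + 1):
            status = execute(subtask)
            log.append((subtask, attempt, status))
            if status is Status.SUCCESS:
                break
        else:
            # Retry budget exhausted: stop and ask the fleet layer for help.
            return {"done": False, "escalate": subtask, "log": log}
    return {"done": True, "escalate": None, "log": log}


def fake_executor(subtask):
    # Toy executor for the example: one sub-task always fails.
    return Status.FAILURE if subtask == "pick item" else Status.SUCCESS


result = run_plan(["open tote", "pick item", "close tote"], fake_executor)
```

Here the plan stops at the sub-task that exhausts its retries, and the returned record names it so that a human can step in by prompting or teleoperation.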

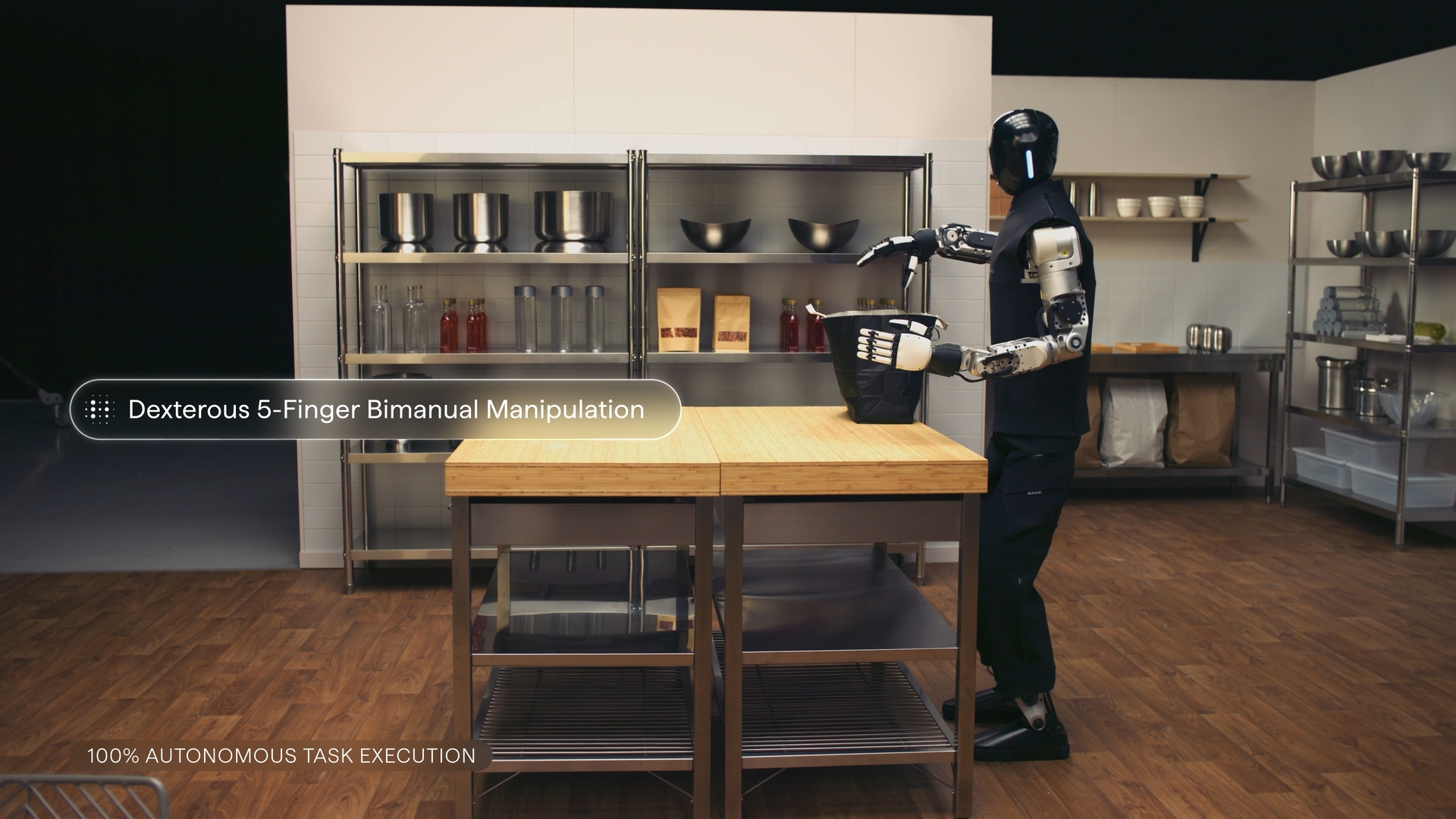

System 1 — VLA-Based Low-Level Task Execution

A Vision-Language-Action (VLA) neural network that commands target poses for a subset of robot body parts (such as hands, torso or pelvis), driving progress toward immediate low-level objectives set by System 2.

System 1 exposes multiple low-level capabilities to System 2, each invoked via a different prompt. Examples include picking and placing objects, manipulating containers, packing, and locomotion. System 2's VLM-based reasoning selects the capability best suited to the current situation and goal. Each capability also reports its status (success, failure or in-progress) back to System 2 to support progress tracking.
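One way to picture capabilities as tools is a registry of named, prompt-driven functions that each return a status. The sketch below is an assumption-laden illustration: the registry, the decorator, the capability names and the stand-in bodies are hypothetical, and no real VLA is called.

```python
from enum import Enum
from typing import Callable, Dict


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    IN_PROGRESS = "in-progress"


# Hypothetical registry mapping capability names to callables.
CAPABILITIES: Dict[str, Callable[[str], Status]] = {}


def capability(name: str):
    """Decorator that registers a function as a named capability."""
    def register(fn: Callable[[str], Status]) -> Callable[[str], Status]:
        CAPABILITIES[name] = fn
        return fn
    return register


@capability("pick_and_place")
def pick_and_place(prompt: str) -> Status:
    # Stand-in for a policy rollout; succeeds only if the prompt names a tote.
    return Status.SUCCESS if "tote" in prompt else Status.FAILURE


@capability("locomote")
def locomote(prompt: str) -> Status:
    # Stand-in for a long-running motion that has not finished yet.
    return Status.IN_PROGRESS


def invoke(name: str, prompt: str) -> Status:
    """Invoke a capability by name; unknown names are reported as failures."""
    if name not in CAPABILITIES:
        return Status.FAILURE
    return CAPABILITIES[name](prompt)
```

For example, `invoke("pick_and_place", "put the can in the tote")` returns `Status.SUCCESS` in this toy setup, while an unregistered name returns `Status.FAILURE` instead of raising.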

The KinetIQ VLA issues new predictions at a subsecond timescale, usually 5-10 Hz. Each prediction is a chunk of higher-frequency actions (30 to 50 Hz, depending on the task) that System 0 executes. Action execution is fully asynchronous: a new action chunk is always being prepared while the previous one is still being executed.
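The two rates quoted above imply a lower bound on chunk length. As a back-of-the-envelope sketch, assuming the next chunk arrives exactly one prediction period later (an idealization; real chunks would likely be longer to absorb latency jitter), the helper below computes that bound. The function name is hypothetical.

```python
def min_chunk_length(prediction_hz: int, action_hz: int) -> int:
    """Fewest actions a chunk needs to cover one prediction period.

    Uses integer ceiling division so a partial action step still counts.
    """
    return -(-action_hz // prediction_hz)


# The four corners of the quoted ranges (5-10 Hz predictions, 30-50 Hz actions).
bounds = {
    (5, 30): min_chunk_length(5, 30),
    (5, 50): min_chunk_length(5, 50),
    (10, 30): min_chunk_length(10, 30),
    (10, 50): min_chunk_length(10, 50),
}
```

Under this assumption a chunk needs between 3 and 10 actions, depending on which ends of the two ranges apply.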

To ensure that an asynchronously produced chunk doesn’t contradict what actually happened while it was being produced, KinetIQ uses prefix conditioning: every chunk prediction is conditioned on the part of the previous chunk that is expected to be executed during inference. Unlike inpainting, this is a universal technique, equally applicable to both autoregressive and flow-matching models.
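The bookkeeping behind prefix conditioning can be sketched in a few lines: estimate how many actions of the previous chunk will run while the model is busy, and treat those as the prefix the next prediction is conditioned on. This is a minimal illustration, not KinetIQ's code; the function names, the millisecond latency estimate and the list-of-actions representation are assumptions, and the model call itself is omitted.

```python
def prefix_length(latency_ms: int, action_rate_hz: int, remaining: int) -> int:
    """Actions expected to execute during inference, capped at what is left.

    Integer ceiling division avoids floating-point rounding surprises.
    """
    steps = (latency_ms * action_rate_hz + 999) // 1000
    return min(steps, remaining)


def split_chunk(prev_chunk, start_index, latency_ms, action_rate_hz):
    """Split the unexecuted part of the previous chunk into (prefix, tail).

    `prefix` will run while the new chunk is being predicted, so the new
    prediction is conditioned on it; `tail` is what the new chunk replaces.
    """
    remaining = prev_chunk[start_index:]
    k = prefix_length(latency_ms, action_rate_hz, len(remaining))
    return remaining[:k], remaining[k:]


# Toy chunk of 10 actions, 2 already executed, 120 ms inference at 50 Hz.
prefix, tail = split_chunk(list(range(10)), 2, 120, 50)
```

With these numbers, 6 actions run during inference, so the prefix is actions 2 through 7 and only the last two actions of the old chunk are superseded by the new prediction.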

System 0 — RL-based Whole-Body Control

The goal of System 0 is to achieve pose targets set by System 1, while solving for the state of all robot joints in a way that continuously guarantees dynamic stability. System 0 runs at 50 Hz.

The KinetIQ implementation of System 0 uses RL-trained whole-body control for both bipedal and wheeled robots. This approach lets KinetIQ fully exploit the synergy between platforms and benefit from the power of RL in producing capable locomotion controllers.

Whole-body control is trained solely in simulation with online reinforcement learning, requiring roughly 15k hours of experience to produce a capable model.

The Path Toward Solving Physical AI

Working in unison across multiple embodiments and timescales, the four cognitive layers of KinetIQ can achieve complex goals that require fleet orchestration, reasoning, dexterous manipulation, dynamic recovery and stability control. KinetIQ's fully agentic design, which embraces recent breakthroughs in AI, is one of the key factors behind Humanoid’s rapid progress towards solving Physical AI.